It is wrong always, everywhere, and for anyone, to believe anything upon insufficient evidence.

William Clifford, “The Ethics of Belief”

In the great boarding-house of nature, the cakes and the butter and the syrup seldom come out so even and leave the plates so clean. Indeed, we should view them with scientific suspicion if they did.

William James, “The Will to Believe”

The idea that the practice of medicine should be based on evidence strikes most laypersons as trivially true. An expert’s claims differ from the opinions of a nonexpert precisely because the former enjoy a certain evidential support that the latter lack. In this respect, the emergence of evidence-based medicine (EBM) circa 1992 as a “new paradigm” raises a worrisome question for most people: If medical professionals are now encouraged to make claims (diagnoses, prognoses, therapeutic recommendations, etc.) on the basis of evidence, what were they doing before 1992 [1]? Of course, upon closer examination, one learns that EBM is less a revolution than an urging that medical practitioners base their clinical judgments on the best available clinical research, most often the results of randomized clinical trials (RCTs), which represent the “gold standard” [2].

Throughout the discussion on the nature and merit of EBM, the questions of what constitutes evidence and how it relates to how we should act and think remain largely unexplored. Indeed, both advocates and opponents of EBM have assumed that we have a relatively unproblematic understanding of evidence. Critics of EBM have argued that EBM places too great an emphasis on data derived from RCTs while ignoring non-RCT evidence that can be clinically useful [3]. But the disagreement here concerns the scope of clinically relevant evidence and not the nature of evidence per se. From a philosophical point of view, however, evidence and its logical relationship to theories and judgments represent one of the most perplexing puzzles in the philosophy of science.

An Abbreviated History of Evidential Reasoning

David Hume’s argument against the rationality of inductive reasoning arguably marks the start of a critical examination of evidential reasoning (that is, forming beliefs on the basis of evidence) in the modern era [4]. Hume argued that empirical generalizations on the basis of past observed evidence must rely on the principle that nature behaves in a uniform fashion; that is, all else being equal, future events will resemble past instances of a similar sort. The problem, however, is that if we attempt to justify this principle of uniformity of nature by appealing to evidence of its past success, we risk justifying induction inductively. To put it another way, if one questions the rationality of inductive reasoning, appealing to inductive reasoning to answer that worry can hardly bring any reassurance. It is analogous to allaying one’s concern about someone’s trustworthiness by asking the person if he can be trusted.

Hume left empiricists and admirers of science with a challenge: Make sense of the apparent success and superiority of evidential reasoning in the face of the argument that it is not rationally justifiable.

Impressed by advances made in mathematics, logic, and physics in the early twentieth century, logical positivists such as Rudolf Carnap and Carl Hempel attempted to formalize the logic of confirmation and the logic of science in general. The implicit assumption behind the logical positivists’ project was that observations supply theory-neutral evidence that can adjudicate scientific disagreements. The burst of scientific progress in the early twentieth century, the positivists believed, was the product of rigorous adherence to the scientific method. Rather than addressing Hume’s fundamental challenge to the rationality of induction, the positivists focused on explicating the logic of induction. They argued that the success of modern science should provide prima facie justification for evidential reasoning.

The logical positivists were followed by theorists like Karl Popper, who agreed that there is a logic to the scientific method but argued that this logic was not about confirming theories by collecting confirming evidence—rather it was about attempting to falsify theories. In Popper’s view, what distinguished Einstein’s general relativity from Marx’s theory of history was not that the former enjoyed evidential support while the latter did not. Popper argued that proponents of Marx’s theory of history could find evidence wherever they looked; supporting evidence, it turns out, can be acquired too easily. The real separation between these two theories was that Marx’s theory of history was not falsifiable; there was no possible evidence one could find to refute the theory. Therefore, it was unscientific. Popper’s view of what constituted scientific evidence depended on a clear concept of falsification, on the ability to logically refute a hypothesis on the basis of a contrary piece of evidence.

Most philosophers today believe that evidence provides support for theories. One might define confirming evidence as follows:

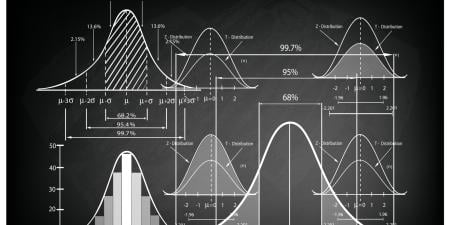

P(H/e+) > P(H)

That is, the probability of a hypothesis H being true given evidence e+ is greater than the probability of the hypothesis alone being true.

Moreover, a piece of evidence can also be used to disconfirm a hypothesis. Contrary evidence in this case would lower the probability that the hypothesis is true. More precisely, if e− is a piece of disconfirming evidence, the relationship can be represented as:

P(H/e−) < P(H)

These two conditions appear to be necessary for any full analysis of the concept of evidence. However, the crucial question for those who are interested in the logic of confirmation (and disconfirmation) concerns the conditions under which these definitions are satisfied.
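To make the two inequalities concrete, here is a minimal sketch that applies Bayes' theorem to a hypothetical diagnostic test. The numbers (a 10 percent prior, a 90 percent chance of a positive result when H is true, and a 20 percent chance when H is false) are illustrative assumptions and do not come from any study; the function name `posterior` is likewise invented for this example.

```python
def posterior(prior, p_e_given_h, p_e_given_not_h):
    """Return P(H | e) computed with Bayes' theorem."""
    # Total probability of observing e, whether or not H is true.
    p_e = p_e_given_h * prior + p_e_given_not_h * (1.0 - prior)
    return p_e_given_h * prior / p_e


# Hypothetical values, chosen only for illustration.
prior = 0.10                   # P(H): the patient has the condition
p_pos_given_h = 0.90           # P(e+ | H)
p_pos_given_not_h = 0.20       # P(e+ | not H)

# A positive result plays the role of e+; a negative result plays e-.
after_positive = posterior(prior, p_pos_given_h, p_pos_given_not_h)
after_negative = posterior(prior, 1.0 - p_pos_given_h, 1.0 - p_pos_given_not_h)

print(f"P(H)      = {prior:.3f}")
print(f"P(H | e+) = {after_positive:.3f}")  # higher than the prior
print(f"P(H | e-) = {after_negative:.3f}")  # lower than the prior
```

Under these assumed values the positive result raises the probability of H from 0.10 to about 0.33, and the negative result lowers it to about 0.014. The sketch also makes the dependence visible: whether an observation confirms or disconfirms H turns entirely on how probable the observation is taken to be with and without H, and those likelihoods are exactly what a background theory has to supply.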

The optimistic belief that philosophers would eventually uncover the logic of evidential reasoning began to wane in the second half of the twentieth century. A number of philosophers offered arguments challenging the possibility of an objective logical relationship between evidence and theories. Below are two well-known arguments.

Thomas Kuhn’s Paradigms

In The Structure of Scientific Revolutions, Kuhn combined a rigorous examination of the history of scientific revolutions with a critical analysis of the logic of science. According to Kuhn, normal science consists of attempts to solve specific problems in accordance with the accepted paradigm of the time. A paradigm specifies among other things what constitutes well-formulated puzzles, acceptable solutions to those puzzles, and properly ignorable abnormalities. Puzzle-solving exemplars identified by the paradigm provide the models that practitioners of science should emulate. Moreover, as scientific revolutions replace one paradigm with another, the criteria that practitioners use to evaluate the support an observation offers for a theory also change.

Take, for example, Newton’s concept of gravitational attraction, which entails action at a distance. Lack of an explanation that involved direct physical contact would have rendered it a nonstarter in a pre-Newtonian paradigm. But as Newtonian mechanics became the dominant paradigm, explaining action at a distance ceased to be a puzzle that demanded attention. The failure to explain away action at a distance no longer represented a defect in a proposed solution to a research problem or counted as a piece of evidence against the plausibility of the solution. What constituted acceptable evidence changed based on the new paradigm. Other examples of such “paradigm shifts” include Copernicus’ postulation that the sun, rather than the Earth, is at the center of our solar system and Einstein’s theory of relativity.

Most Western medical professionals subscribe to roughly the same paradigm: Diseases and disabilities stem from morphological, chemical, or genetic causes. Furthermore, there is a general agreement in terms of what qualifies as evidence (e.g., RCTs, cell biology, organic chemistry, and so on). The supportive strength of a given piece of evidence is also largely uncontroversial. RCTs, for instance, are generally thought to provide more support than other types of studies designed to answer the same question.

Nevertheless, across different paradigms, the evidential support of a particular observation can vary significantly. A Chinese physician who explains diseases and disabilities in terms of improper flow of qi would not look at a tumor mass as evidence of cancer. Indeed, the very description of the observation would be different. There is no way of defining qi without a fairly robust acceptance of an entirely different medical approach. In other words, there is no way to incorporate qi into Western medical paradigms without a fundamental change in the accepted paradigm. I am not endorsing here the soundness of Chinese medicine, to be sure. However, this example demonstrates that the idea of evidence serving as a neutral arbiter in choosing among theories is simply incompatible with Kuhn’s view that evidence cannot be evaluated in a paradigm-independent manner. The appearance of irrationality or quackery can only be measured from the point of view of one’s accepted paradigm. There is, in other words, no appeal to evidence that does not rely on an accepted paradigm.

Willard Van Orman Quine and the Role of Psychology

No other philosopher played as important a role in the Anglo-American analytic philosophy tradition in the latter half of the twentieth century as did Quine. In “Two Dogmas of Empiricism,” Quine argued forcefully that logical truths and empirical truths differ only in degree [5]. The picture that Quine paints is essentially this: Our understanding of the world is based on an interconnected web of beliefs. Sitting in the core of this web are firmly held beliefs such as the law of noncontradiction (i.e., contradictory claims are never true), some fundamental claims of physics (e.g., gravity), and so on. As one extends to the periphery of the web, beliefs become less significant in the sense that a rejection of one of these beliefs requires only a minor revision in the web to restore logical consistency.

Suppose one observes that a patient receiving benzodiazepine to treat anxiety has instead become more anxious. How would one reconcile that against one’s expectation? According to Quine, when there is a disturbance to our web of beliefs, we revise our web in the least cognitively taxing manner. In other words, we revise our peripheral beliefs before we revise our core beliefs.

In the case of the unexpected effect of benzodiazepine, one might choose to abandon one’s belief that the pharmacokinetics of the drug is fully known. Alternatively, one could revise a core belief to accommodate the observation. For example, one might conclude that anxiety (and perhaps other psychobehavioral diseases) is not caused by biochemical processes affected by benzodiazepine, but this latter revision strikes us as implausible. The cognitive price it would require, given its effect on so many other beliefs, would be much higher than merely believing that the drug’s function is not fully known. Nevertheless, Quine insists that such a revision would not be impossible or necessarily incorrect.

In the history of science, there have been numerous occasions when core beliefs have been revised (e.g., the Copernican revolution). Why and when individuals and the community decide to make the deep revision is a matter of sociology and psychology—is an individual or a society ready to make the cognitive leap it takes to believe something new? It is perhaps here that Kuhn’s insight into scientific revolutions becomes relevant. The important point is that the relationship between evidence and theory may hinge more on psychology than on logic or the pursuit of objective truth.

Why Defining Evidence Matters for Clinicians

I have selected these arguments from the tradition of analytic philosophy to raise some doubts that anyone can rely on an unproblematic concept of evidential support. Medical professionals ought to appreciate the complexity of the concept of evidence as outlined by philosophers in the past 50 years.

It is important to remember these arguments when acting on various types of evidence in clinical settings. Insisting like William Clifford, who is quoted at the beginning of this article, that no judgment be made without sufficient evidence constitutes the adoption of a principle that relies on deeply problematic concepts [6]. Rather, clinicians would be wise to remember that evidence rarely comes out so even or clean.

References

- For a critical examination of EBM’s use of “paradigm shift” see Couto J. Evidence-based medicine: a Kuhnian perspective of a transvestite non-theory. J Eval Clin Pract. 1998;4(4):267-275.

- One of the earliest articulations of EBM can be found in Evidence-Based Medicine Working Group. Evidence-based medicine: a new approach to teaching the practice of medicine. JAMA. 1992;268(17):2420-2425.

- Feinstein AR, Horwitz RI. Problems in the “evidence” of “evidence-based medicine”. Am J Med. 1997;103(6):529-535.

- Hume D. An Enquiry Concerning Human Understanding. Beauchamp T, ed. Oxford: Oxford University Press; 2006: sec IV.

- Quine W. From a Logical Point of View: Nine Logico-Philosophical Essays. Boston: Harvard University Press; 1980.

- Clifford W. The ethics of belief. In: Madigan T, ed. The Ethics of Beliefs and Other Essays. Amherst, NY: Prometheus; 1999.