Abstract

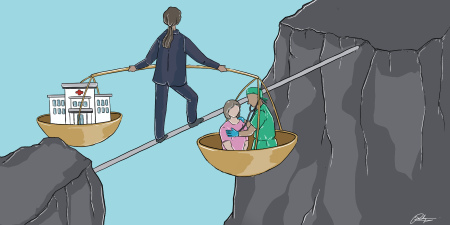

Managing risk in cases that involve the use of clinical decision support tools is ethically complex. This article highlights some of these complexities and offers 3 considerations for risk managers to draw upon when assessing risk in cases using clinical decision support: (1) the type of decision support offered, (2) how well a decision support tool helps accomplish work that needs to be done, and (3) how well values embedded in a tool align with patients’ and caregivers’ professed values.

Decision Support

Clinical decision support systems are computerized systems designed to assist clinical decision making about an individual patient.1 Although they offer a number of benefits to clinicians and patients, they have also been recognized as introducing new risks into clinical work.2,3 In this article, I describe 2 general types of clinical decision support systems—tools that augment human capabilities and tools that offload clinician work—and assess risks posed by each. I then offer 3 considerations to take into account when managing risks posed by using clinical decision support systems: (1) the type of decision support offered by the tool, (2) how well a tool’s capabilities align with the work to be done, and (3) how well values embedded in a tool align with values held by patients, families, and caregivers subject to outcomes of a tool’s use.4,5,6

Two Types of Decision Support

Decision support systems generally belong in 1 of 2 categories: (1) tools that augment human capabilities and (2) tools that offload (primarily via automation) caregivers’ tasks.4,5,6

Capability-enhancing decision support is analogous to a microscope. The lenses in a microscope do not change how a user perceives; they enhance the user’s “eye hardware” at small scales. Digital vital signs monitors, for example, create line plots that augment humans’ abilities to recognize patterns in vital signs data. When properly designed, these tools often make it easier and safer for caregivers and others to maintain attention to their work, and users’ skill with such tools improves through practice and training.6,7 When tools are designed poorly, however, users have difficulty forming an integrated picture of a situation, a failure that has contributed to disastrous outcomes in some industries.8

Decision support systems that offload work complete or structure tasks, changing the actual work to be done. Self-driving vehicles and diagnostic systems (such as IBM’s Watson program9), for example, use various forms of machine learning to recognize patterns in data; in health care, these patterns can inform clinical recommendations and obviate the need for a human to guide or direct task execution. As a result, the human’s role shifts to monitoring and evaluating the system’s output. In health care, these systems can free up clinicians’ time so that, ideally, clinicians can focus more on the human dimensions of providing care. But when humans are too far removed from or overly reliant on a system, patient care can suffer. For instance, because automated forms of clinical decision support mostly operate on information mined from a patient’s electronic health record, limitations such as missing data, inadequate sample sizes, and misclassification can introduce bias into a system’s outputs and thus into clinical recommendations that affect individual patients or entire patient populations.10

Select the Right Tool

When considering a technological solution to a problem, an organization should choose a tool in light of the work context in which it will be used, not solely on the basis of the tool’s advertised features and functions. If work context is not considered when purchasing a new piece of technology, the organization runs the risk that the tool will not align with established workflows and processes, which can introduce new risks to patients and caregivers. One widely recognized failure to align a tool with the context in which it is used is the design of current electronic health record systems.3 Another example of a tool built around its available features and functions, rather than around its use in a particular context, is Google Glass, a wearable display mounted on eyeglass frames that gives users hands-free internet access, photography, and videography.11 Aside from technical glitches and privacy concerns, some have questioned whether this device could solve a problem in any workplace without further modification12,13 to help accomplish the specific work to be done, avoid errors, and, in health care, avoid harming patients or disrupting caregivers’ workflows.


One way to determine whether and how well a decision support tool helps a caregiver’s work is to test that tool rigorously by simulating conditions that closely mimic the actual clinical situations in which it would be used. Many simulations that manufacturers use to test decision support tools focus primarily on use case scenarios that portray the tool as effective in the so-called ordinary situations in which it would be used. This approach to testing can generate unreasonable expectations about a device’s promise, resulting in potentially dangerous mismatches between a device’s intended uses and its actual capacity to help clinicians care for patients. Rather than portraying the device in a favorable light, simulation testing should be designed to reveal when, how, and where a device could fail. In addition to calibrating clinicians’ expectations about a device’s capabilities and limitations more accurately, this approach can help risk managers identify potential hazards and misuses, develop contingency plans, and convey coveted (and not always easily procured) feedback to designers about patients’ outcomes and caregivers’ experiences of device implementation.14

Purpose and Value

From a humanitarian perspective, risk managers should consider the purpose (eg, cost savings, efficiency, accuracy) for which a decision support tool was developed and the corresponding values embedded in the tool. More specifically, risk managers should determine whether the purpose of and values informing the system align with those of the patients, families, and caregivers whose lives will be influenced by use of the tool. Because current computerized systems are limited to processing symbols (eg, words, numbers, categories), the values that drive decision support are those that correspond to priorities (eg, cost savings) that can be expressed as symbols and can therefore serve as a scaffold for decision support. Humans, however, are often driven by emotions, experiences, and intuitions of which they are not always aware; these do not align with the values programmed into computerized systems because they cannot be translated into symbols that a computer program recognizes.15

Consider, for example, route selection in a navigation aid such as Google Maps. The primary values that drive this tool’s route recommendations are the distance from a driver’s location (point A) to a destination (point B) and the time it will take to travel from point A to point B. Currently, however, navigation applications do not account for less easily defined values that frequently guide human navigation behavior, such as a preference for scenic routes. Just as there are gaps between the values programmed into navigation aids and the values held by the motorists using them, health care is fraught with cases in which emotional values outweigh efficiencies or savings in “symbol-able” measurables, such as time or money.

Conclusion

Risk managers must consider the values that drive engineered systems, note gaps between the values expressed by decision support tools’ designs and those expressed through the behaviors of the people who use them, and avoid promoting overreliance on decision support tools. It is difficult to know exactly how decision support will be used to facilitate decision making within any given context or to anticipate the emergence of behaviors that develop after a decision support system has been integrated into clinical settings. Managing the risks introduced by a tool means understanding the type of decision support needed in a specific context, understanding the type of decision support offered by the tool, and recognizing how well the values embedded in the tool align with those of patients and caregivers.

References

- Berner ES. Clinical Decision Support Systems: Theory and Practice. 2nd ed. New York, NY: Springer Science Plus Business Media; 2007.

- Sahota N, Lloyd R, Ramakrishna A, et al; CCDSS Systematic Review Team. Computerized clinical decision support systems for acute care management: a decision-maker-researcher partnership systematic review of effects on process of care and patient outcomes. Implement Sci. 2011;6(1):91.

- Wears RL. Health information technology and victory. Ann Emerg Med. 2015;65(2):143-145.

- Miller RA, Masarie FE Jr. The demise of the “Greek Oracle” model for medical diagnostic systems. Methods Inf Med. 1990;29(1):1-2.

- Vicente KJ. Ecological interface design: progress and challenges. Hum Factors. 2002;44(1):62-78.

- Lintern G, Motavalli A. Healthcare information systems: the cognitive challenge. BMC Med Inform Decis Mak. 2018;18:3.

- Staszewski JJ. Models of human expertise as blueprints for cognitive engineering: applications to landmine detection. Proc Hum Factors Ergon Soc Annu Meet. 2004;48(3):458-462.

- Endsley MR. Designing for situation awareness in complex systems. In: Proceedings of the Second International Workshop on Symbiosis of Humans, Artifacts and Environment; November 2001; Kyoto, Japan:1-14.

- Chen Y, Elenee Argentinis JD, Weber G. Watson IBM: how cognitive computing can be applied to big data challenges in life sciences research. Clin Ther. 2016;38(4):688-701.

- Gianfrancesco MA, Tamang S, Yazdany J, Schmajuk G. Potential biases in machine learning algorithms using electronic health record data. JAMA Intern Med. 2018;178(11):1544-1547.

- Google. Glass Enterprise Edition 2: tech specs. https://www.google.com/glass/tech-specs/. Accessed April 20, 2020.

- Muensterer OJ, Lacher M, Zoeller C, Bronstein M, Kübler J. Google Glass in pediatric surgery: an exploratory study. Int J Surg. 2014;12(4):281-289.

- Sawyer BD, Finomore VS, Calvo AA, Hancock PA. Google Glass: a driver distraction cause or cure? Hum Factors. 2014;56(7):1307-1321.

- Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293(10):1223-1238.

- Klein GA. Streetlights and Shadows: Searching for the Keys to Adaptive Decision Making. Cambridge, MA: MIT Press; 2011.